A Squirrel Is Stuck Between An Ocean And A Hard Place

Approaches to AI in virtual systems and Minecraft

Yesterday, while wandering around the mLab and procrastinating on the choice between going to bed at a reasonable hour and staying up to work on midterms, I came across something I have seen many times in my Minecraft play. A small, non-aggressive mob was stuck on a cliff ledge between the ocean and the rock face, with no means of escape. By small, I mean a mob smaller than half of a game “block” (Minecraft’s “standard” unit of measurement). In this particular case the mob was a squirrel, a colourful addition to Minecraft’s bestiary from one of the mLab’s 130-odd mods. It had either spawned on the ledge or fallen there, and because of its small size it was stuck, doomed to fall into the ocean if it tried to escape.

Thus the squirrel simply sat there.

In meatspace, or the real world, a squirrel that found itself on that ledge would eventually die of starvation. Perhaps some instinct would drive the meatspace squirrel to attempt the impossibly steep dirt-and-rock cliff face.

But in Minecraft, the squirrel is doomed to sit on that ledge for as long as the chunk of server land this drama is unfolding upon stays loaded: ostensibly, for the rest of time.

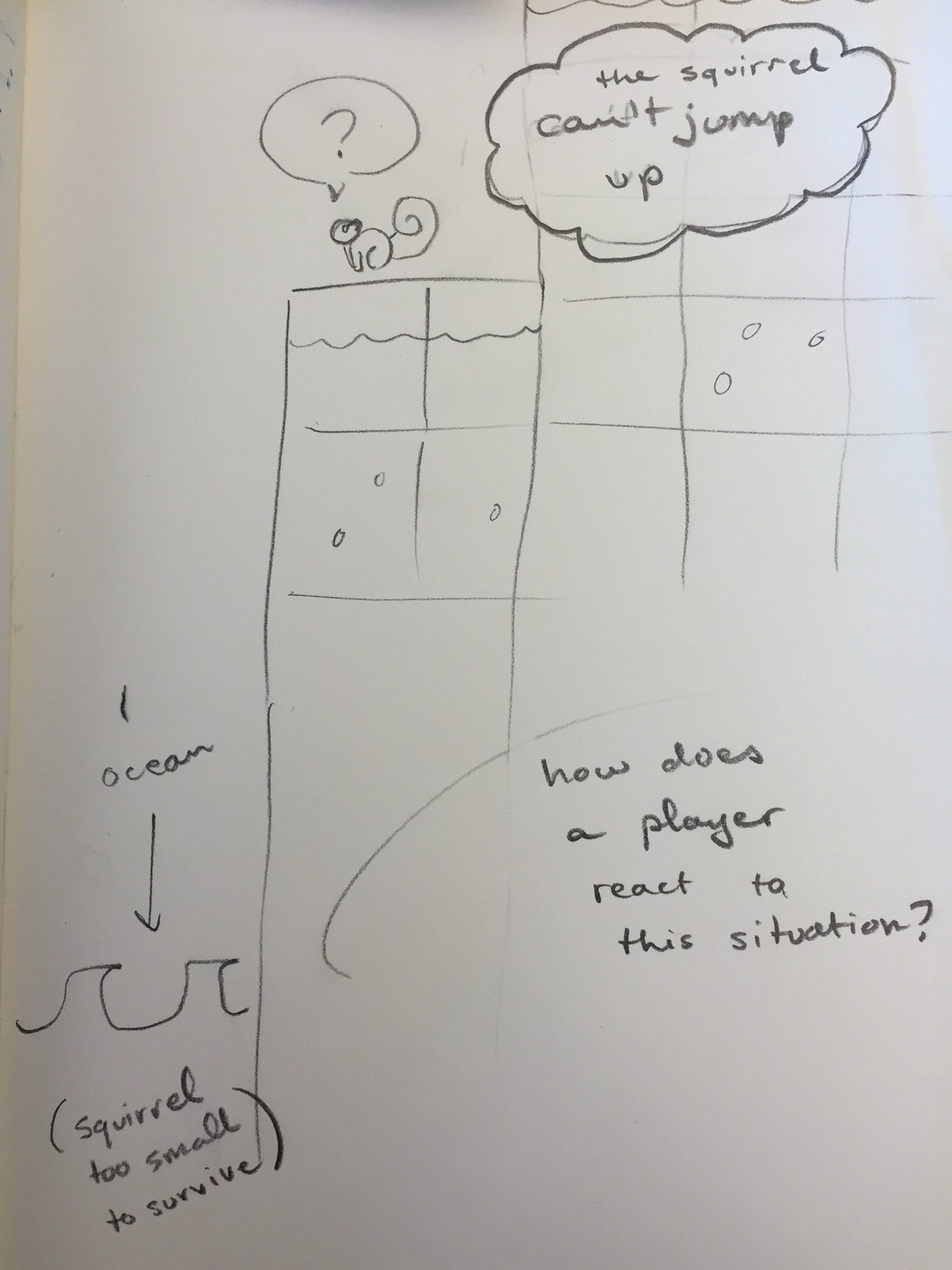

Because I wasn’t thinking of taking screenshots of the stranded Minecraft squirrel last night (sleep deprivation, tsk tsk) I decided to illustrate the situation for you with a sketch:

The squirrel is in a tough situation. When I saw it while fluttering about the server, my immediate impulse was to dig a little path into the cliff’s rocky face so that the squirrel could escape to the woods above and go about its squirrelly business.

Why would I do that? Why so much empathy for a Minecraft squirrel?

The squirrel, after all, is just an artefact of computer code meant to do things that I, as a human playing Minecraft, can interpret as squirrel activity. This behaviour, together with its appearance, cues my brain to see a “squirrel” in what is a bit of computer code. Without my interpretation and visual interaction, is that bit of computer code really a squirrel, or just a bit of computer code? If no one is around to interpret the squirrel’s immobile waiting on that rock ledge as panic and despair, is the squirrel really in any danger?

From the perspective of the strip of code governing the squirrel’s activity, that squirrel’s activity makes perfect sense. The computer code has judged the situation and asserted: “There is danger in every direction, but right here is safe. So the squirrel object won’t move. Success!”

It is my interpretation that the squirrel is stuck, and because I think a squirrel should be frolicking about in the woods, I see its sitting on a ledge as somehow unsatisfactory. I am moved to change the circumstances and give the squirrel an opportunity to rectify its activity.

In an interview between filmmaker Gina Harazsti and Dr Darren Wershler (I was lucky enough to see a preview of the footage last week), Professor Wershler asserts that the reason we care so much about “cute mobs” in Minecraft is nostalgia. We don’t care about skeletons or creepers stuck on ledges with nowhere to go. We are nostalgic about squirrels in Minecraft because we are nostalgic about real squirrels. Is it that we want to play out a fantasy as a human emissary to an animal society in danger? There’s a lot of writing on “cuteness” and its evolutionary function. Are we playing out real-world biases via nonhuman mobs in Minecraft? Because the squirrel has a fluffy tail and is so small, are we confusing two very different nonhuman “creatures”, one of flesh and fur, the other an artefact of software?

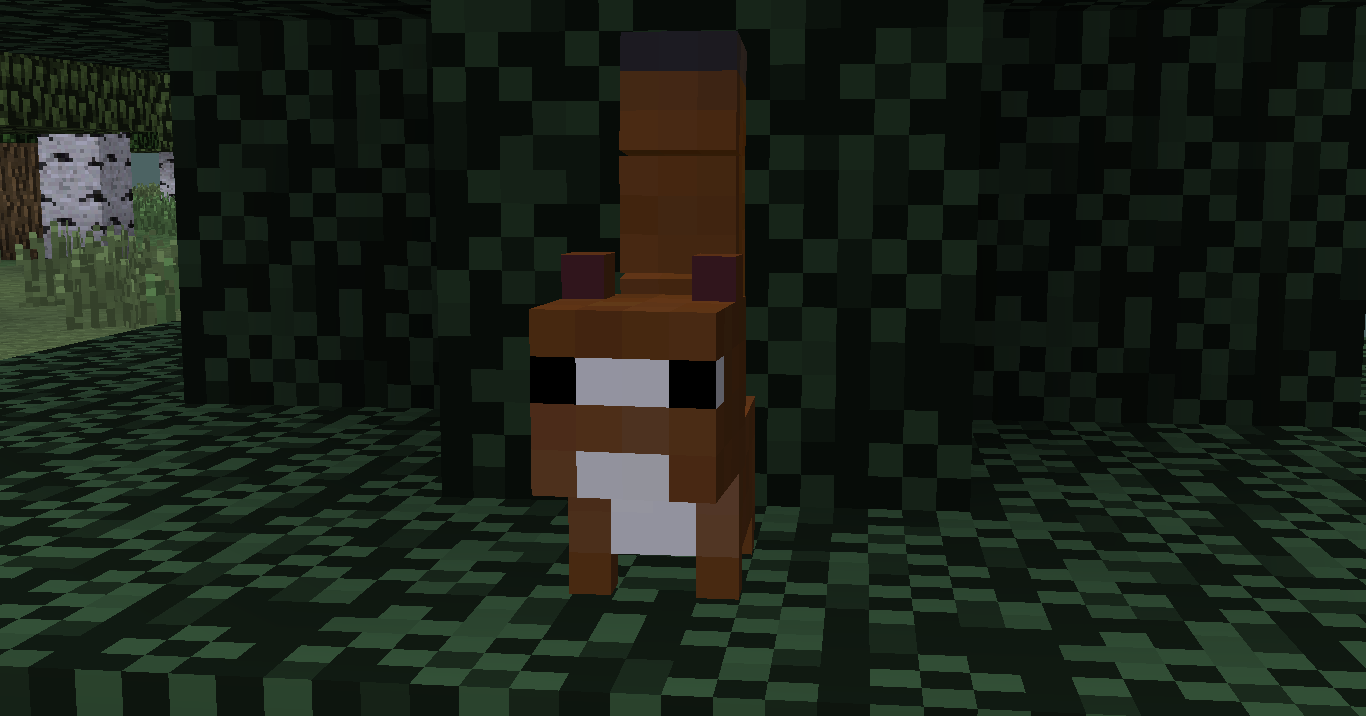

SO CUTE AND FLUFFFEEEEEEEEEE

When artificial intelligence research and cognitive science were both in their infancy, some researchers developed a piecemeal approach, hoping that investigations of artificial intelligence would be a useful tool for reproducing “human” intelligence. In his paper *Intelligence without representation*, which describes research done at the Artificial Intelligence Laboratory of the Massachusetts Institute of Technology, Rodney A. Brooks asserts flat out: “Artificial intelligence started as a field whose goal was to replicate human level intelligence in a machine.” This original goal was gargantuan, but there was hope that by isolating the different “pieces”, or components, of cognition, we could replicate them with mechanical processes whose “brains” would be software. Brooks’ paper dates from 1987 and as such presents a particular moment in artificial intelligence research; however, I have found it interesting to compare some of the points he makes about breaking artificial intelligence down into units performing various functions with the nonhumans of Minecraft.

The points Brooks makes concern artificial intelligence research aimed at building robots for the real world, rather than software interacting in a virtual space. He does, however, mention game-like spaces, with the famous “blocks world” example:

> In the late sixties and early seventies the blocks world became a popular domain for AI research. It had a uniform and simple semantics. The key to success was to represent the state of the world completely and explicitly. Search techniques could then be used for planning within this well-understood world. Learning could also be done within the blocks world; there were only a few simple concepts worth learning and they could be captured by enumerating the set of subexpressions which must be contained in any formal description of a world including an instance of the concept.
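To make the quote concrete, here is a minimal Python sketch of the technique it describes, assuming a toy world of three lettered blocks on a table. The world state is represented completely and explicitly (every block maps to whatever it rests on), and breadth-first search plans a sequence of moves within that well-understood world. The block names and helper functions are hypothetical illustrations; this is not code from SHRDLU or from any system Brooks discusses.

```python
from collections import deque

# The whole world is one explicit state: each block maps to what it rests on
# (another block or "table"). Stored as a sorted tuple so it can be hashed.
def make_state(on):
    return tuple(sorted(on.items()))

def is_clear(on, thing):
    """The table is always clear; a block is clear if nothing rests on it."""
    return thing == "table" or thing not in on.values()

def legal_moves(state):
    """Yield (move, next_state) for every clear block that can go somewhere new."""
    on = dict(state)
    for block in on:
        if not is_clear(on, block):
            continue
        for dest in list(on) + ["table"]:
            if dest in (block, on[block]) or not is_clear(on, dest):
                continue
            nxt = dict(on)
            nxt[block] = dest
            yield (block, dest), make_state(nxt)

def plan(start, goal):
    """Breadth-first search; returns the shortest list of moves, or None."""
    start_s, goal_s = make_state(start), make_state(goal)
    came_from = {start_s: None}
    frontier = deque([start_s])
    while frontier:
        state = frontier.popleft()
        if state == goal_s:
            moves = []
            while came_from[state] is not None:
                state, move = came_from[state]
                moves.append(move)
            return moves[::-1]
        for move, nxt in legal_moves(state):
            if nxt not in came_from:
                came_from[nxt] = (state, move)
                frontier.append(nxt)
    return None

# The classic "Sussman anomaly": C sits on A; the goal is a tower A-on-B-on-C.
start = {"A": "table", "B": "table", "C": "A"}
goal = {"A": "B", "B": "C", "C": "table"}
print(plan(start, goal))  # [('C', 'table'), ('B', 'C'), ('A', 'B')]
```

The search succeeds only because every fact about this tiny world is known in advance, which is precisely the simplification Brooks objects to.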

Brooks, however, criticizes the heuristic problem-solving methodologies used in the “blocks world”, arguing that they are too simple for serious, epistemological artificial intelligence research. It can be difficult to picture what the “blocks world” was; it was for me when I first started investigating it, until I stumbled upon SHRDLU, an early natural language understanding computer program. SHRDLU simulates a small world of blocks, pyramids and a box on a table: a person types commands and questions in English, and the program moves the objects, answers questions about them, and can be taught new words and concepts that it remembers for later interactions. By analogy, you could imagine teaching such software that chicken is a type of meat, and that “meat” is a concept worth remembering. An excerpt from a SHRDLU session illustrates this:

Person: PICK UP A BIG RED BLOCK.

Computer: OK. (does it)

Person: GRASP THE PYRAMID.

Computer: I DON'T UNDERSTAND WHICH PYRAMID YOU MEAN.

Person: FIND A BLOCK WHICH IS TALLER THAN THE ONE YOU ARE HOLDING AND PUT IT INTO THE BOX.

Computer: BY "IT", I ASSUME YOU MEAN THE BLOCK WHICH IS TALLER THAN THE ONE I AM HOLDING.

Computer: OK. (does it)

Person: WHAT DOES THE BOX CONTAIN?

Computer: THE BLUE PYRAMID AND THE BLUE BLOCK.

Person: WHAT IS THE PYRAMID SUPPORTED BY?

Computer: THE BOX.

Person: HOW MANY BLOCKS ARE NOT IN THE BOX?

Computer: FOUR OF THEM.

Person: IS AT LEAST ONE OF THEM NARROWER THAN THE ONE WHICH I TOLD YOU TO PICK UP?

Computer: YES, THE RED CUBE.

Person: IS IT SUPPORTED?

Computer: YES, BY THE TABLE.

Person: CAN THE TABLE PICK UP BLOCKS?

Computer: NO.

Person: CAN A PYRAMID BE SUPPORTED BY A BLOCK?

Computer: YES.

Person: CAN A PYRAMID SUPPORT A PYRAMID?

Computer: I DON'T KNOW.

Person: STACK UP TWO PYRAMIDS.

Computer: I CAN'T.

Person: WILL YOU PLEASE STACK UP BOTH OF THE RED BLOCKS AND EITHER A GREEN CUBE OR A PYRAMID?

Computer: OK. (does it)

Person: WHICH CUBE IS SITTING ON THE TABLE?

Computer: THE LARGE GREEN ONE WHICH SUPPORTS THE RED PYRAMID.

Person: IS THERE A LARGE BLOCK BEHIND A PYRAMID?

Computer: YES, THREE OF THEM: A LARGE RED ONE, A LARGE GREEN CUBE, AND THE BLUE ONE.

Person: PUT A SMALL ONE ONTO THE GREEN CUBE WHICH SUPPORTS A PYRAMID.

Computer: OK. (does it)

Person: PUT THE LITTLEST PYRAMID ON TOP OF IT.

Computer: OK. (does it)

Person: DOES THE SHORTEST THING THE TALLEST PYRAMID'S SUPPORT SUPPORTS SUPPORT ANYTHING GREEN?

Computer: YES, THE GREEN PYRAMID.

Is the computer software actually learning something? It certainly simulates the process of learning. In this excerpt it can 1) perform an action, 2) admit that an action is impossible, 3) answer a question in the affirmative, 4) answer a question in the negative, or 5) admit ignorance, naming or counting objects and asking for clarification along the way. But if you taught the computer thousands of different concepts (cubes, pyramids, pasta, cookies, ducks, stellar cartography), each broken down into “blocks” of “knowledge” represented as “strings”, would the software actually be able to do anything beyond those five kinds of output? The world has been simplified so much, and the kinds of input and output available are so far removed from real-world abstraction and complexity, that the result does not hold much weight.

I return to my Minecraft squirrel drama on the ledge. The squirrel program running inside Minecraft could be having a conversation similar to the one recorded in the SHRDLU transcript:

Squirrel: I am sitting here.

Computer: You are indeed sitting here.

Squirrel: Should I move forward?

Computer: You should not. There is a vast ocean and you will drown.

Squirrel: Should I move left?

Computer: You should not. There is an ocean there too.

Squirrel: That sucks. Maybe I should move right?

Computer: Nope. Ocean all around, amigo.

Squirrel: Awww man. Shall I move backwards?

Computer: There is a block of earth there.

Squirrel: What about jumping? Can I jump onto the block of earth?

Computer: Alas, you can’t. There is a block of earth there too.

Squirrel: So… I should just sit here?

Computer: Sounds good to me.

The squirrel, like the SHRDLU program, takes “input” from the world (in the squirrel’s case, the game controls what is in the world, whereas in SHRDLU the world seems largely defined by the human’s input). The squirrel program is totally cool just sitting there, because it has gone through its programming and figured out that to stay alive (which squirrels in Minecraft apparently like to do) it should stay out of the water. The squirrel will never learn to dig through the earth or climb the cliff face to get out. The program is quite content to execute its programming and chill.
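The imagined dialogue above can be read as a very small decision rule. Below is a minimal Python sketch of such a rule, assuming a hypothetical description of the squirrel’s surroundings in which each neighbouring cell is open ground, water or earth, and the squirrel can hop up at most one block. It is not the actual AI of Minecraft or of the mod that adds the squirrel; the cell types, direction names and functions are illustrative assumptions.

```python
import random

# Hypothetical cell types, far simpler than real Minecraft blocks.
OPEN, WATER, EARTH = "open", "water", "earth"

def safe_moves(neighbours):
    """Return the directions the squirrel could move in without drowning.

    `neighbours` maps a direction name to a (feet, above) pair: the
    neighbouring cell at the squirrel's own height, and the cell one block up.
    """
    safe = []
    for direction, (feet, above) in neighbours.items():
        if feet == OPEN:
            safe.append(direction)      # walk onto dry ground
        elif feet == EARTH and above == OPEN:
            safe.append(direction)      # hop up a single block
        # WATER means drowning; EARTH with EARTH above is a wall too high to jump.
    return safe

def choose_action(neighbours, rng=random):
    """Wander in a random safe direction, or sit still if there is none."""
    options = safe_moves(neighbours)
    return rng.choice(options) if options else "sit"

# The stranded squirrel: ocean on three sides, a two-block cliff behind it.
ledge = {
    "forward": (WATER, OPEN),
    "left": (WATER, OPEN),
    "right": (WATER, OPEN),
    "backward": (EARTH, EARTH),
}
print(choose_action(ledge))  # sit
```

Digging out the upper block of the cliff, so that `ledge["backward"]` becomes `(EARTH, OPEN)`, makes `choose_action` return `"backward"`: the rule itself never changes, only the world it is given.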

So when I feel pangs of sadness that a squirrel is caught on the ledge, that is my human abstraction of what is going on. The squirrel program is actually quite happy where it is. But wouldn’t the squirrel also be quite happy to move around? When I’ve watched Minecraft mob patterns, I’ve noticed that almost all creatures feel an impulse to move around. If I unleash a pack of chickens into the wild, they’ll eventually meander away, often splitting off in different directions. Squirrels are similar, bounding away happily through the world. Even having dug into the process of “artificial intelligence” in the mobs of Minecraft, I still wonder: would the squirrel prefer to be moving, and does it consider that state ideal? I project the human emotion “happiness” onto the bounding squirrels and chickens of Minecraft, but could that be a fanciful description of something actually happening within the hardware?

While I know that, aesthetically, I sympathize with the squirrels and chickens of Minecraft because they are kind of cute, I also have to wonder whether, from an artificial intelligence point of view, something deeper may be occurring under the surface. Of course, I can terminate the Minecraft program and walk away from the stranded squirrel completely. Brooks argues that “toys” offer too simplified a domain for serious study, and it is perhaps anachronistically futile to use an AI research paper from 1987 to contemplate Minecraft, a game released in 2009.

Reading Brooks, I am intrigued by the engineering methodology he lays out for building Creatures: “completely autonomous mobile agents that co-exist in the world with humans, and are seen by those humans as intelligent beings in their own right.” These artificial organisms would exhibit the following properties:

- Timeliness (as in reaction time)

- Robustness (minor changes in the properties of the world will not lead to a total collapse of the Creature’s activity or behaviour)

- Goals (the Creature should be able to maintain multiple goals, and, depending on the situation, prioritize one goal over another, as well as take advantage of fortuitous opportunities)

- Purpose (a Creature should do something in the world, should have some purpose in being)

In many ways, the Minecraft mobs satisfy these four requirements. The squirrel prioritizes staying alive over bounding about happily. It would jump up and try to flee if I hit it with my battleaxe. It would react if I dug a path in the earth for it to escape the cliff. And it has a purpose: it is a denizen of the forest, and it makes the forest complete.
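Brooks’ “Goals” property, and the squirrel putting survival ahead of bounding about, can be sketched as a fixed-priority arbiter: each behaviour either proposes an action or passes, and the highest-priority proposal wins. This is a minimal Python sketch under stated assumptions: the behaviours, the `Senses` fields and their ordering are hypothetical, and a fixed priority list is a simplification, not a faithful model of Brooks’ own layered architectures or of Minecraft’s mob code.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

@dataclass
class Senses:
    """Hypothetical snapshot of what the squirrel perceives on one game tick."""
    was_hit: bool = False
    dry_path_available: bool = True

# A behaviour returns an action name, or None to defer to lower priorities.
Behaviour = Callable[[Senses], Optional[str]]

def flee(s: Senses) -> Optional[str]:
    # Timeliness: react immediately when attacked.
    return "flee" if s.was_hit else None

def stay_dry(s: Senses) -> Optional[str]:
    # Survival: with no dry path, staying put beats wandering into the ocean.
    return "sit" if not s.dry_path_available else None

def wander(s: Senses) -> Optional[str]:
    # Default activity: bound about when nothing more urgent applies.
    return "wander"

# Highest priority first.
BEHAVIOURS: List[Behaviour] = [flee, stay_dry, wander]

def arbitrate(senses: Senses, behaviours: List[Behaviour] = BEHAVIOURS) -> str:
    """Return the action of the first (highest-priority) behaviour that fires."""
    for behaviour in behaviours:
        action = behaviour(senses)
        if action is not None:
            return action
    return "idle"

print(arbitrate(Senses(dry_path_available=False)))                # sit
print(arbitrate(Senses()))                                        # wander
print(arbitrate(Senses(was_hit=True, dry_path_available=False)))  # flee
```

Digging a path for the stranded squirrel corresponds to flipping `dry_path_available` to `True`, at which point the same arbiter falls through to `wander`.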

However, where Brooks and I may differ in interpreting the concept of a Creature is in the words “co-exist in the world with humans”. Players may enter the world of Minecraft, but are they really “in” the world of Minecraft? As a piece of software, does the squirrel program really “exist in the world”? Does its physical existence as a tiny component of a hard drive detract much from its virtual manifestation as software?

The squirrel of Minecraft is an artefact built on the foundations of a great deal of artificial intelligence research. We can pause or “disable” the squirrel program, or the SHRDLU program, and when we re-engage the program it does not necessarily restart from scratch. Depending on how Minecraft loads mobs into chunks and respawns them, that same squirrel may reappear and reassess that it is still stuck between the ocean and a hard place. But is it really the same squirrel? Is it really manifesting a portion of intelligence? Would it prefer to be bouncing about happily rather than stuck on the ledge?

Or is all this beside the point? Perhaps the more interesting investigation concerns how the player responds to the situation. So I ask you: if you found the squirrel stranded on the ledge, would you do anything about it? And why?